Assistive Device to Recognize Expressions

Assisting Visually Impaired Individuals with Real-time Emotion Detection

In this project, I addressed a significant challenge for visually impaired individuals who often miss non-verbal emotional cues in face-to-face interactions. Without the ability to see facial expressions, they are left with limited understanding of emotional context during communication, which can hinder social interactions and emotional bonding. The goal was to create an assistive device that could help bridge this gap by recognizing and conveying facial expressions in real time.

⚠️ Challenges:

- Facial expression recognition needs to happen instantaneously, creating a need for high-performing yet lightweight models.

- Deciding the best method for conveying emotional information was non-trivial. It had to be intuitive and non-intrusive.

- Indoor and outdoor environments presented different challenges in terms of lighting conditions and background noise.

💡 Solutions:

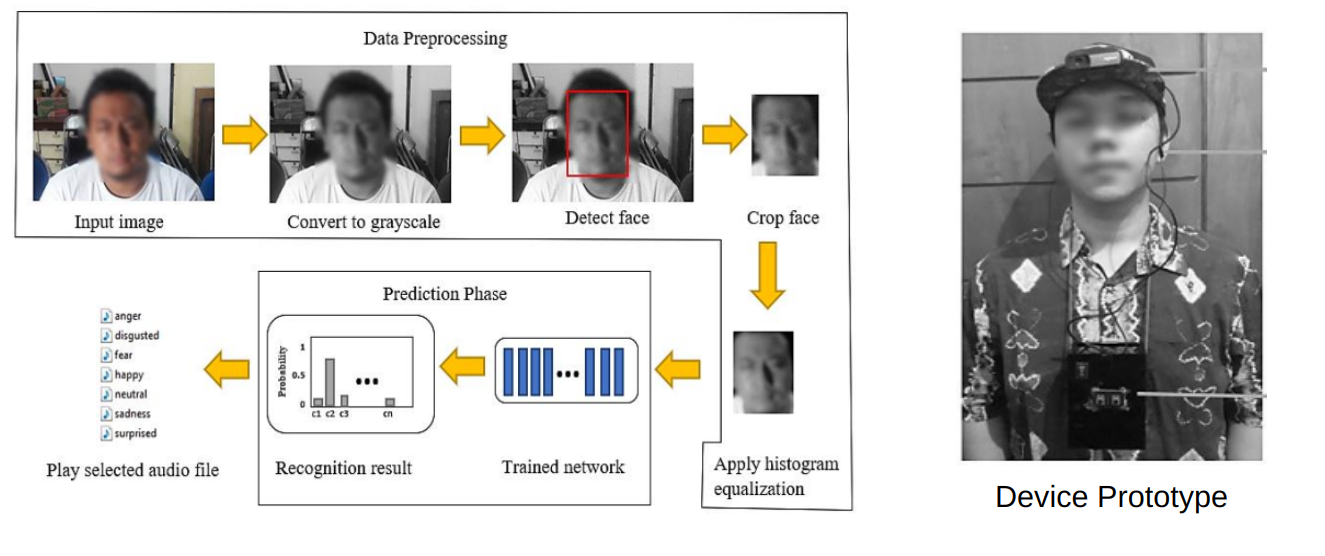

- Developed a real-time facial expression recognition system that adapts to varying lighting conditions.

- Explored intuitive feedback methods to effectively communicate emotional context to visually impaired individuals.

- Designed a lightweight, wearable solution ensuring smooth interaction and enhanced usability.
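One way to keep spoken feedback non-intrusive is to announce an expression only once it has been stable for several frames, and to stay silent while it remains unchanged. The following is a minimal sketch of that idea, not the project's actual feedback logic: the class name `ExpressionAnnouncer` and the `window` and `min_votes` parameters are hypothetical and would need tuning on the device.

```python
from collections import Counter, deque


class ExpressionAnnouncer:
    """Decide when a per-frame expression label is worth speaking aloud.

    Hypothetical helper: names and default values are illustrative assumptions.
    """

    def __init__(self, window=5, min_votes=4):
        self.recent = deque(maxlen=window)  # labels from the last `window` frames
        self.min_votes = min_votes          # votes needed before speaking
        self.last_spoken = None             # last label that was announced

    def update(self, label):
        """Feed one per-frame label; return a label to speak, or None."""
        self.recent.append(label)
        winner, votes = Counter(self.recent).most_common(1)[0]
        # Stay silent if no face was classified, the vote is too weak,
        # or the user has already been told about this expression.
        if winner is None or votes < self.min_votes or winner == self.last_spoken:
            return None
        self.last_spoken = winner
        return winner
```

Each frame's predicted label (or `None` when no face is classified) is passed to `update`, and only a non-`None` return value is sent to the audio output. A larger `window` makes the feedback calmer but slower to react.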

🛠️ Proposed Methods:

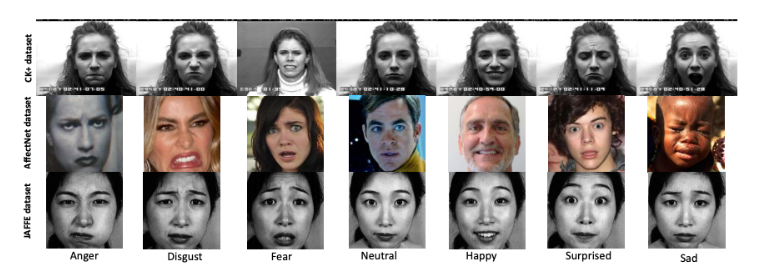

- Leveraged various facial expression datasets to ensure model robustness.

- Used an auditory feedback system to communicate emotional context effectively.

- Trained a TensorFlow Lite (TFLite) optimized model to ensure compatibility with edge devices.

- Implemented the system on a Raspberry Pi edge device with a wearable camera for real-time inference.
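The inference step listed above can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the project's code: the `LABELS` order, the `min_score` threshold, and the helper names are hypothetical, and the sketch assumes the `tflite_runtime` package is installed on the Raspberry Pi and that the face crop has already been resized and converted to the dtype the model expects.

```python
# Hypothetical label order; the real order depends on how the model was trained.
LABELS = ["angry", "disgust", "fear", "happy", "neutral", "sad", "surprise"]


def load_interpreter(model_path):
    """Load a TFLite model (requires the tflite_runtime package)."""
    from tflite_runtime.interpreter import Interpreter  # imported lazily

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    return interpreter


def top_label(scores, labels=LABELS, min_score=0.5):
    """Return the highest-scoring label, or None if the model is unsure."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_score:
        return None
    return labels[best]


def classify(interpreter, face):
    """Run one inference.

    `face` is assumed to be a NumPy array that already matches the model's
    input shape and dtype (e.g. float32, or uint8 for a quantized model).
    """
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]
    interpreter.set_tensor(input_info["index"], face)
    interpreter.invoke()
    scores = interpreter.get_tensor(output_info["index"])[0]
    return top_label([float(s) for s in scores])
```

Returning `None` for low-confidence frames lets the audio stage stay silent rather than announce a guess. For a quantized model the raw scores would need dequantizing before the threshold is applied.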

🎯 Results:

The results were validated through real-world testing with visually impaired participants and show promise for making communication more inclusive for people with limited or no sight.

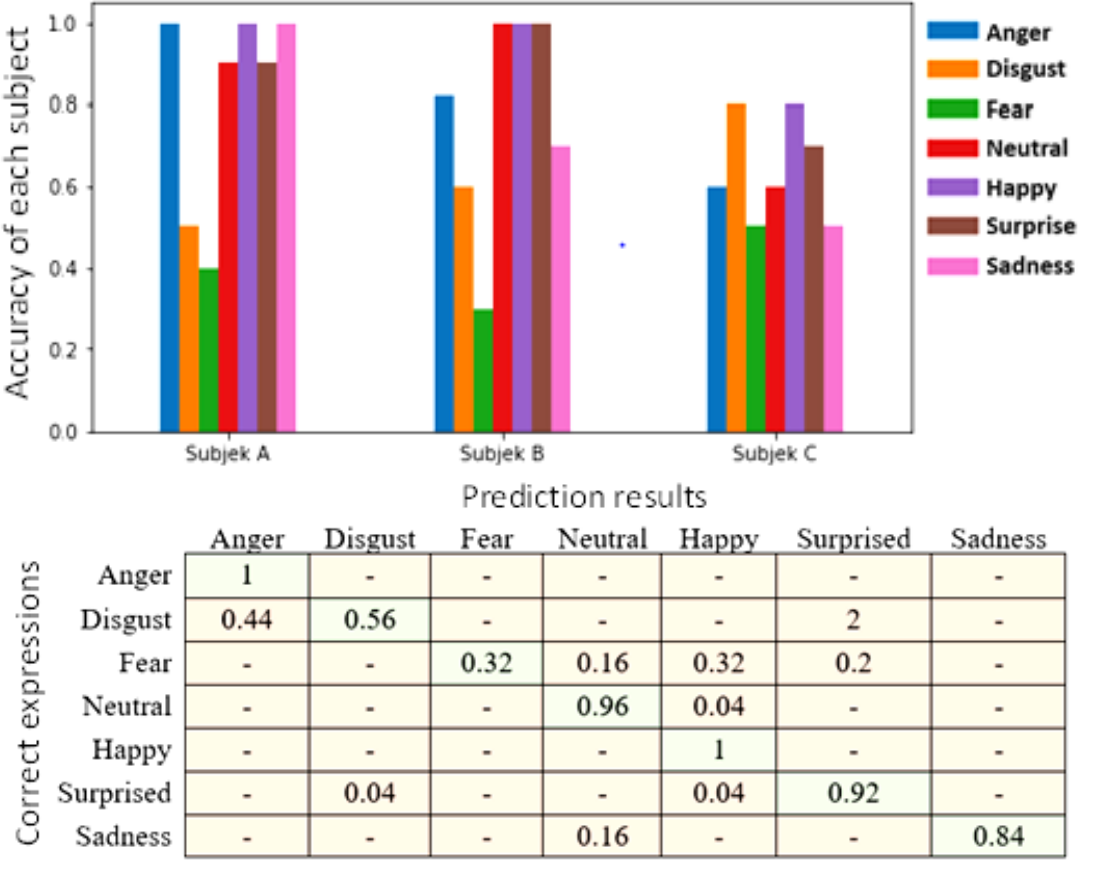

- Indoor Settings.

The user can guess the facial expressions correctly by 80% with natural room conditions without any additional lighting

The user can guess the facial expressions correctly by 80% with natural room conditions without any additional lighting - Outdoor Settings.

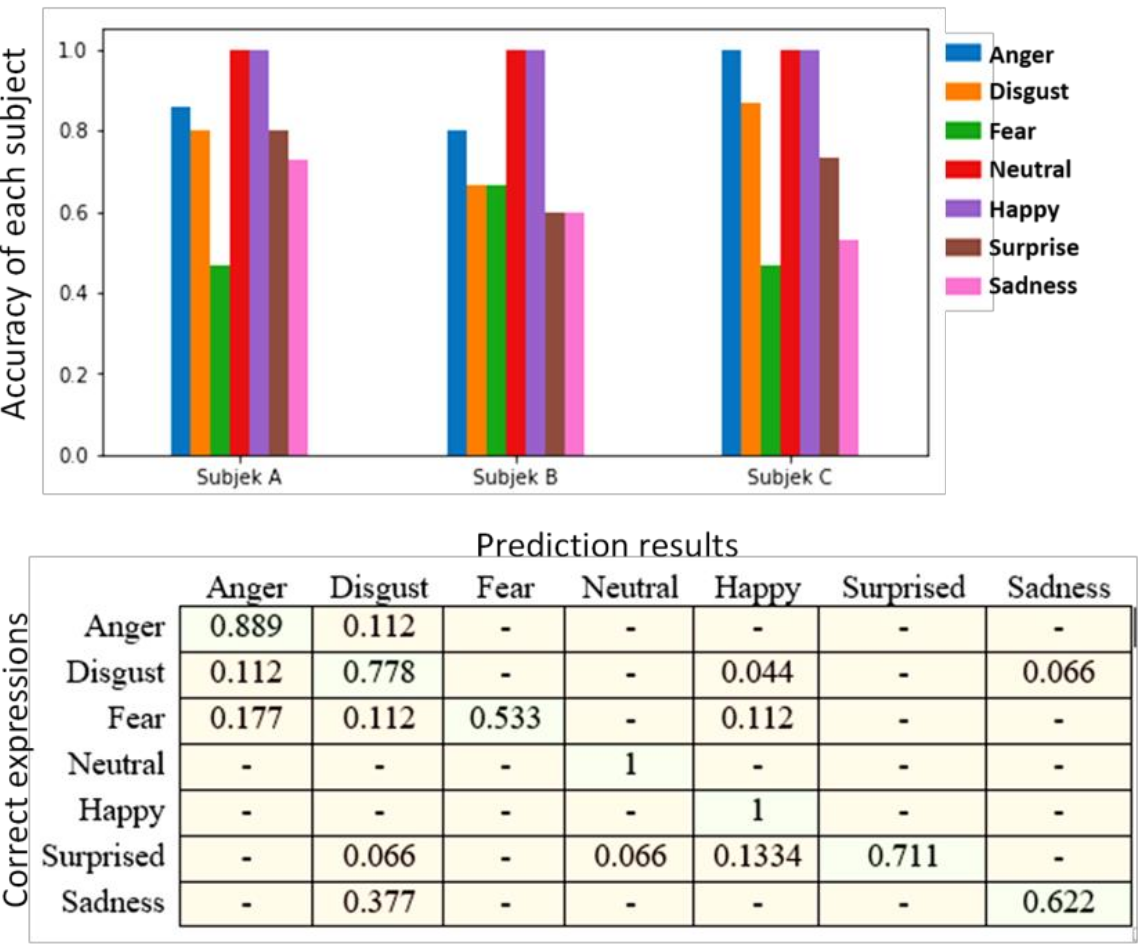

The user can guess the facial expressions correctly by 78% in an outdoor environment with no additional lighting

The user can guess the facial expressions correctly by 78% in an outdoor environment with no additional lighting

📌 Key Takeaway:

The proposed facial expression recognition system, implemented on a wearable device, successfully assisted visually impaired individuals by providing auditory feedback during social interactions, achieving meaningful performance in both indoor and outdoor settings. Future improvements will focus on enhancing accuracy, ergonomics, and power management, including the creation of a custom dataset.
